21. A source produces three symbols A, B and C with probabilities P(A) = $$\frac{1}{2}$$, P(B) = $$\frac{1}{4}$$ and P(C) = $$\frac{1}{4}$$. The source entropy is

22. Consider a discrete memoryless source with source alphabet S = {s0, s1, s2} with probabilities P(s0) = $$\frac{1}{4}$$, P(s1) = $$\frac{1}{4}$$ and P(s2) = $$\frac{1}{2}$$

The entropy of the source is
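Questions 21 and 22 use the same three probabilities (1/2, 1/4, 1/4), only in a different order, so one entropy computation covers both. The sketch below applies $$H = -\sum_i p_i \log_2 p_i$$ in bits per symbol. The helper name `entropy_bits` is illustrative, not from the source.

```python
import math

def entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p), in bits per symbol."""
    assert math.isclose(sum(probs), 1.0), "probabilities must sum to 1"
    # Terms with p = 0 contribute nothing (0 * log2 0 is taken as 0)
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Q21: P(A) = 1/2, P(B) = 1/4, P(C) = 1/4
h21 = entropy_bits([1/2, 1/4, 1/4])
# Q22: P(s0) = 1/4, P(s1) = 1/4, P(s2) = 1/2 (same values, reordered)
h22 = entropy_bits([1/4, 1/4, 1/2])
print(h21, h22)  # 1.5 1.5
```

Entropy depends only on the set of probabilities, not on which symbol carries which value, which is why both sources give the same result.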

23. According to the Shannon-Hartley law, in the absence of noise the channel capacity is
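A worked form of the law may help here. Writing $$B$$ for the bandwidth in Hz and $$S/N$$ for the signal-to-noise power ratio (symbols assumed for this sketch), letting the noise power shrink to zero makes the logarithm grow without bound for any fixed $$B > 0$$ and $$S > 0$$:

$$C = B\log_2\left(1 + \frac{S}{N}\right), \qquad \lim_{N \to 0} C = \infty$$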

24. Which of the following statements is correct?

S1: Channel capacity is the same for two binary symmetric channels with transition probabilities as 0.1 and 0.9.

S2: For a binary symmetric channel with transition probability 0.5, the channel capacity is 1.

S3: For AWGN channel with infinite bandwidth, channel capacity is also infinite.

S4: If Y = g(X), then H(Y) > H(X).
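Statements S1 to S4 can be probed numerically. The sketch below assumes the standard results $$C_{BSC} = 1 - H_b(p)$$ and $$C = B\log_2\left(1 + \frac{S}{N_0 B}\right)$$ for the AWGN channel; the values S = 1 and N0 = 1 and the example mapping g(X) = X² are hypothetical choices for illustration only.

```python
import math

def binary_entropy(p):
    """H_b(p) in bits, using the convention 0 * log2(0) = 0."""
    return -sum(q * math.log2(q) for q in (p, 1 - p) if q > 0)

def bsc_capacity(p):
    """Capacity (bits per channel use) of a BSC with crossover probability p."""
    return 1.0 - binary_entropy(p)

def awgn_capacity(bandwidth_hz, signal_power, n0):
    """Shannon-Hartley capacity B * log2(1 + S / (N0 * B)) in bits/s."""
    snr = signal_power / (n0 * bandwidth_hz)
    # log1p keeps precision when the SNR becomes tiny at very large B
    return bandwidth_hz * math.log1p(snr) / math.log(2)

def entropy_of(values):
    """Entropy (bits) of a list of equally likely outcomes; repeats merge."""
    counts = {}
    for v in values:
        counts[v] = counts.get(v, 0) + 1
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

# S1: crossover probabilities 0.1 and 0.9
print(bsc_capacity(0.1), bsc_capacity(0.9))
# S2: crossover probability 0.5
print(bsc_capacity(0.5))  # 0.0
# S3: hypothetical S = 1 W, N0 = 1 W/Hz; capacity levels off near (S/N0) * log2(e)
for b in (1e2, 1e4, 1e6):
    print(b, awgn_capacity(b, 1.0, 1.0))
print("limit:", math.log2(math.e))
# S4: hypothetical X uniform on {-1, 0, 1} and Y = g(X) = X**2
xs = [-1, 0, 1]
ys = [x * x for x in xs]
print(entropy_of(xs), entropy_of(ys))
```

The printed values show that a BSC with crossover 0.5 carries no information, that AWGN capacity stays finite as bandwidth grows, and that a deterministic function of X cannot have more entropy than X itself.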

25. A source generates three symbols with probabilities 0.25, 0.25 and 0.50 at a rate of 3000 symbols per second. Assuming the symbols are generated independently, the most efficient source encoder would have an average bit rate of
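To make "most efficient source encoder" concrete, the sketch below assumes an optimal prefix code built with Huffman's algorithm (the source does not name a specific encoder). Because these probabilities are powers of 1/2, the Huffman average codeword length equals the entropy, and the bit rate is that length times the symbol rate. The function name `huffman_code_lengths` is illustrative.

```python
import heapq
import math

def huffman_code_lengths(probs):
    """Return Huffman codeword lengths for the given symbol probabilities."""
    # Heap entries: (probability, tie-breaker, symbol indices in this subtree)
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    counter = len(probs)
    while len(heap) > 1:
        p1, _, syms1 = heapq.heappop(heap)
        p2, _, syms2 = heapq.heappop(heap)
        # Every symbol under the merged node gains one more bit
        for s in syms1 + syms2:
            lengths[s] += 1
        heapq.heappush(heap, (p1 + p2, counter, syms1 + syms2))
        counter += 1
    return lengths

probs = [0.25, 0.25, 0.50]
symbol_rate = 3000  # symbols per second

lengths = huffman_code_lengths(probs)
avg_len = sum(p * n for p, n in zip(probs, lengths))  # bits per symbol
entropy = -sum(p * math.log2(p) for p in probs)       # bits per symbol
print(lengths, avg_len, entropy)  # [2, 2, 1] 1.5 1.5
print(avg_len * symbol_rate)      # 4500.0 (bits per second)
```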

26. Source encoding in a data communication system is done in order to

27. A communication channel has a bandwidth of 3000 Hz. The transmitted power is such that the received signal-to-noise ratio is 1023. The maximum data rate that can be transmitted error-free through the channel is:
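The Shannon-Hartley formula $$C = B\log_2(1 + S/N)$$ gives the answer directly. This sketch assumes the SNR of 1023 is a linear power ratio rather than a value in dB, which the choice 1 + 1023 = 1024 = 2¹⁰ strongly suggests.

```python
import math

bandwidth_hz = 3000
snr = 1023  # assumed linear power ratio, not dB

capacity = bandwidth_hz * math.log2(1 + snr)  # bits per second
print(capacity)  # 30000.0
```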

28. In a binary system, the symbols 0 and 1 occur with probabilities p0 and p1, respectively. The maximum value for entropy occurs when:
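A quick numerical check of where the binary entropy $$H = -p_0\log_2 p_0 - p_1\log_2 p_1$$ (with $$p_1 = 1 - p_0$$) peaks: the sketch below scans a hypothetical grid of 101 values of p0. It is an illustration, not a proof; the analytic argument is that H is symmetric about p0 = 0.5 and concave.

```python
import math

def binary_entropy(p0):
    """Entropy in bits of a binary source with P(0) = p0 and P(1) = 1 - p0."""
    p1 = 1 - p0
    return -sum(p * math.log2(p) for p in (p0, p1) if p > 0)

grid = [i / 100 for i in range(101)]  # p0 = 0.00, 0.01, ..., 1.00
best_p0 = max(grid, key=binary_entropy)
print(best_p0, binary_entropy(best_p0))  # 0.5 1.0
```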

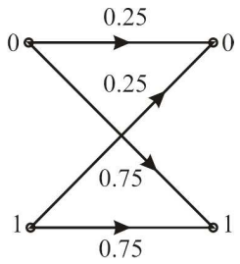

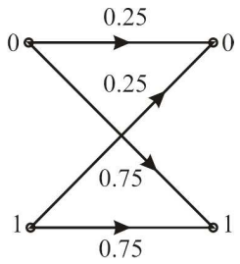

29. Consider a binary memoryless channel characterized by the transition probability diagram shown in the figure.

The channel is

30. A random experiment has 64 equally likely outcomes. Find the information associated with each outcome.
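For equally likely outcomes each has probability 1/64, and the self-information is $$I = \log_2(1/p)$$ bits. A one-line sketch of the arithmetic:

```python
import math

outcomes = 64
p = 1 / outcomes            # probability of each equally likely outcome
info_bits = -math.log2(p)   # self-information I = log2(1/p), in bits
print(info_bits)  # 6.0
```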