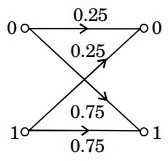

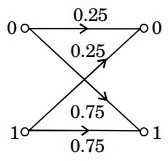

1. Consider a binary memoryless channel characterized by the transition probability diagram shown in the figure. The channel is

2. While decoding a cyclic code, if the received codeword is the same as the transmitted codeword, then r(x) mod g(x) is equal to . . . . . . . .

3. For a received sequence of 6 bits, which decoding mechanism selects the best-correlated sequence by correlating the received sequence with all permissible sequences?

4. Binary Huffman coding is a

5. In a discrete memoryless source, the current letter produced by the source is statistically independent of . . . . . . . .

6. The capacity of a binary symmetric channel, where H(P) is the binary entropy function, is

7. When the channel is noisy, with a conditional probability of error p = 0.5, the channel capacity and the entropy function would be, respectively,

8. A source generates three symbols m0, m1, m2 with probabilities 0.5, 0.25, 0.25. Find the entropy.

9. Shannon's law relates which of the following?

10. A source produces 26 symbols with equal probability. What is the average information produced by this source?
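The numeric questions above (2, 6, 7, 8 and 10) can be checked with a short Python sketch. It is only an illustration under stated assumptions: the helper names `entropy`, `binary_entropy`, `bsc_capacity` and `poly_mod2` are hypothetical; the probabilities in question 8 are taken to be 0.5, 0.25, 0.25, since a probability distribution must sum to 1; and the generator polynomial g(x) = x^3 + x + 1 used for question 2 is an assumed example that does not come from the question.

```python
import math


def entropy(probs):
    """Shannon entropy, in bits per symbol, of a discrete distribution."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Terms with p == 0 contribute nothing, so they are skipped.
    return -sum(p * math.log2(p) for p in probs if p > 0)


def binary_entropy(p):
    """Binary entropy function H(p) for a two-outcome source."""
    return entropy([p, 1.0 - p])


def bsc_capacity(p):
    """Capacity of a binary symmetric channel with crossover probability p."""
    return 1.0 - binary_entropy(p)


def poly_mod2(r, g):
    """Remainder of r(x) divided by g(x) over GF(2).

    Polynomials are stored as integers: bit i is the coefficient of x^i.
    """
    g_len = g.bit_length()
    while r.bit_length() >= g_len:
        # Cancel the leading term of r with a shifted copy of g.
        r ^= g << (r.bit_length() - g_len)
    return r


# Question 8 (assumed probabilities 0.5, 0.25, 0.25).
h_three = entropy([0.5, 0.25, 0.25])

# Question 10: 26 equally likely symbols give log2(26) bits per symbol.
h_uniform = entropy([1 / 26] * 26)

# Questions 6 and 7: capacity and entropy function at p = 0.5.
c_half = bsc_capacity(0.5)
h_half = binary_entropy(0.5)

# Question 2: assumed generator g(x) = x^3 + x + 1 (0b1011).
# 0b1111111 equals (x^3 + x^2 + 1) * g(x) over GF(2), so it is a codeword.
G = 0b1011
syndrome_clean = poly_mod2(0b1111111, G)
syndrome_error = poly_mod2(0b1111111 ^ 0b0000100, G)  # one flipped bit
```

Under these assumptions, `h_three` is 1.5 bits, `h_uniform` is about 4.70 bits, `c_half` is 0 while `h_half` is 1, and the syndrome is zero only for the error-free word.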
